Ty Miller

Managing Director of Threat Intelligence, Penetration Tester and Digital Forensics Specialist, Black Hat Presenter & Trainer, HiTB Trainer, Ruxcon Presenter, Hacking Exposed Linux author.

Anupama Mukherjee • December 15, 2023

You read it everywhere these days: artificial intelligence is taking over the world. Once the stuff of science fiction, AI has made massive progress in recent years and infiltrated nearly every industry. But as AI becomes more advanced and autonomous, the risks are rising too.

On one hand, AI promises to help combat increasingly sophisticated cyber threats. AI can detect anomalies, analyze huge amounts of data, and uncover complex attack patterns that humans alone often miss.

At the same time, AI itself poses new cyber risks that we're only beginning to understand. AI systems can be hacked, manipulated, and tricked just like any technology. As AI becomes more integrated into critical systems and infrastructure, the potential impacts of AI hacking are huge. The rise of AI is transforming cybersecurity for better and for worse.

In this blog post, we're going to look at the rise of generative AI in particular, how it's changing cybersecurity, and what we can do to keep it from becoming a cyber liability.

We spoke to Ty Miller, Managing Director at Threat Intelligence, to give you the expert scoop on the topic.


Artificial intelligence has come a long way since its inception in the 1950s. What began as a set of theoretical concepts is now a reality and continues to evolve at an incredible pace. AI systems today have sophisticated capabilities that allow them to perceive the world, learn, reason, and assist in complex decision-making.

A subset of AI called GenAI has been making waves across the tech industry for its ability to create new and unique ideas from scratch. Gartner defines GenAI as "AI techniques that learn a representation of artifacts from data, and use it to generate brand-new, unique artifacts that resemble but don’t repeat the original data."

At its core, Generative AI utilizes neural networks, which are structures inspired by the human brain. These networks consist of layers of interconnected nodes, each processing and analyzing information. During the training phase, the AI learns to recognize patterns, relationships, and features within the data, allowing it to understand the nuances and characteristics of the information it is exposed to.

Once trained, the neural network becomes a creative force. It can generate new content by combining and extrapolating from the patterns it has learned.

Take ChatGPT, for instance. Its release had a tremendous impact worldwide, attracting over 180 million users. Despite the security concerns that accompanied its rise, the world embraced its power and utility.

Defending against AI-powered cyber threats will require cyber defenses that can match up with the speed and power of AI, i.e. AI-based cyber defenses.

Since GenAI is still very new, security professionals are still trying to understand its potential and use cases. According to Ty, here are two common use cases for GenAI in cybersecurity:

GenAI can be very useful for providing context and insight into security incidents. These solutions can interpret SOC data, explain how to triage security events, flag potential attacks that might be underway, and recommend actions to carry out as part of the investigation. GenAI can also sort through SOC tickets, classify incidents, and escalate only the ones that need human intervention. This could significantly reduce the load on security analysts and free them up to focus on more complex tasks and important projects.
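To make the ticket-sorting idea concrete, here is a minimal sketch of a triage loop. Everything in it is an assumption for illustration: the `Ticket` shape, the label names, and especially `keyword_classifier`, which is a simple keyword stub standing in for a call to a real GenAI model. Note that anything the classifier cannot place is escalated rather than closed.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    ticket_id: str
    summary: str


# Hypothetical policy: these labels must reach a human analyst.
ESCALATE_LABELS = {"likely_attack", "unknown"}


def keyword_classifier(summary: str) -> str:
    """Stand-in for a GenAI call; a real deployment would query a model here."""
    text = summary.lower()
    if "failed login" in text or "malware" in text:
        return "likely_attack"
    if "scheduled scan" in text or "password expiry" in text:
        return "routine"
    return "unknown"


def triage(tickets, classify=keyword_classifier):
    """Split ticket IDs into those to escalate and those safe to auto-close."""
    escalate, auto_close = [], []
    for ticket in tickets:
        label = classify(ticket.summary)
        if label in ESCALATE_LABELS:
            escalate.append(ticket.ticket_id)
        else:
            auto_close.append(ticket.ticket_id)
    return escalate, auto_close
```

Passing the classifier in as a parameter keeps the escalation policy separate from whichever model produces the labels, so the policy can be reviewed and tested on its own.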

GenAI's capabilities can be used to guide penetration tests. For example, you can feed the output of a port scan into a GenAI tool and ask the tool for guidance on the next steps in the penetration testing process. The GenAI system, having learned from a multitude of scenarios and attack patterns, can provide intelligent insights into potential vulnerabilities and suggest strategic approaches for further testing.
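As a rough illustration of feeding port scan output to a GenAI tool, the sketch below turns scan text into a prompt. It assumes nmap-style lines such as `22/tcp open ssh`; the function names and the prompt wording are hypothetical, and the step that actually sends the prompt to a model is deliberately left out.

```python
import re

# Matches nmap-style lines such as "22/tcp open ssh" (assumed input format).
PORT_LINE = re.compile(r"^(\d+)/(tcp|udp)\s+open\s+(\S+)", re.MULTILINE)


def parse_open_ports(scan_output: str):
    """Return (port, protocol, service) tuples for every open port found."""
    return [
        (int(port), proto, service)
        for port, proto, service in PORT_LINE.findall(scan_output)
    ]


def build_prompt(scan_output: str) -> str:
    """Build a prompt that lists only open ports, not the raw scan text."""
    ports = parse_open_ports(scan_output)
    listing = "\n".join(
        f"- {port}/{proto}: {service}" for port, proto, service in ports
    )
    return (
        "You are assisting an authorized penetration test.\n"
        "Open ports found on the in-scope host:\n"
        f"{listing}\n"
        "Suggest the next enumeration steps, in priority order."
    )
```

Parsing first and sending only the extracted fields keeps hostnames, IP addresses, and other client details in the raw scan out of the prompt.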


Here are some ways in which GenAI can be beneficial in cybersecurity:

With its ability to swiftly analyze vast amounts of data, GenAI can be valuable in answering questions that would otherwise require manual searching or investigation. For example, if an analyst needs information on how to use a tool, a GenAI assistant that can answer the question directly could save them hours of internet searches or manual scanning of documentation.

Implementing GenAI in cybersecurity processes translates to significant time and resource savings. The automation of tasks that would otherwise be carried out manually allows cybersecurity professionals to allocate their time more efficiently. This not only streamlines workflows but also enhances the overall productivity of cybersecurity teams.

GenAI is a valuable asset to human analysts, enhancing their efforts in threat detection and response. Its ability to rapidly analyze patterns and identify anomalies complements the analytical process of human professionals. Consequently, cybersecurity experts can devote their attention to more intricate tasks, delegating routine and time-consuming activities to the efficiency of GenAI.

GenAI can automate the documentation process, ensuring that every incident, analysis, or response is accurately recorded. This not only enhances the traceability of cybersecurity activities but also contributes to the creation and maintenance of comprehensive SOPs, compliance reports, and training manuals.

Integrating GenAI into cybersecurity tools brings about a user-friendly revolution. The natural language processing capabilities of GenAI enhance the interface, allowing users to interact with the product effortlessly. This, in turn, eliminates the need for extensive training sessions, enabling users to query data and utilize the cybersecurity product with ease from the outset. The result is a more intuitive and accessible cybersecurity experience for users at all skill levels.

Sometimes the tools and software provided by a company just don’t meet employees’ needs or make their jobs easier. Rather than struggle with inefficient systems, employees find their own solutions.

Employees want to work with up-to-date technology and software. If a business is slow to adopt new tools, employees may take matters into their own hands to access the latest innovations. Younger staff who grew up with technology may bring their favourite tools and devices into the workplace.

Employees value flexibility and autonomy over strict controls and bureaucracy. Shadow IT allows them to choose tools and systems tailored to their preferences and work habits.

Employees adopt shadow IT because they believe it will make them more productive or effective in their roles. They see it as a way to optimize their time and effort.

Sometimes, employees just don't know that using unauthorized tools and services is a problem. Or they're not aware of the policies that exist to avoid the usage of these tools.

Malicious insiders could take advantage of shadow IT to steal company data, disrupt operations, and more. If they're not happy with their current position, or harbor other ill feelings, they could resort to malicious attacks using shadow IT.

While shadow IT does present risks, it’s often born out of practical motivations and a desire to do good work. The key is finding the right balance between security, governance, and employee empowerment. Businesses should aim to provide staff with technology and software that is innovative, flexible, and inspires productivity. When employees’ needs are met, the temptation to turn to shadow IT is reduced.

Integrating a GenAI tool into your cybersecurity strategy increases the risk of sensitive information being leaked. If you use client data to inform or train your GenAI tool without realizing where that data goes, you could inadvertently leak it to a third party and compromise your client's privacy. Moreover, you may be sharing sensitive information that could eventually be exposed to the public and to cybercriminals. This could lead to legal action against your organization and a loss of trust from your customers.

Ty warns, "If you are not careful and you are sending actual client data out, you might find that from an ethical or legal perspective, you are crossing boundaries that you shouldn't."
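One way to reduce this exposure is to scrub obvious identifiers before any text leaves your environment. The sketch below is a minimal example under stated assumptions: the two patterns (email addresses and IPv4 addresses) and the placeholder tokens are illustrative only, and real client data will contain many other sensitive fields that simple patterns like these will not catch.

```python
import re

# Illustrative patterns only; they do not cover every sensitive field.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact(text: str) -> str:
    """Replace email and IPv4 addresses with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return IPV4.sub("[IP]", text)


print(redact("User alice@example.com logged in from 10.0.0.5"))
# prints: User [EMAIL] logged in from [IP]
```

Redaction of this kind is a backstop, not a substitute for deciding which data should be sent to a third-party GenAI service in the first place.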

A common problem with any new technology is that its development outpaces our understanding of it. In the case of GenAI, we're still learning how it works, and given its complexity, it might be a while before we fully understand it.

"That's going to be a challenge for organizations that are implementing AI inside their organizations as to how they maintain the security around their data."

Using GenAI with a lack of knowledge about it could lead to security issues, which could end up backfiring for your enterprise rather than helping you.

If you have any experience using GenAI tools like ChatGPT, you'll know that their responses vary depending on the input you give them. The same question asked multiple times can yield different results. Moreover, you can tweak these inputs to influence and manipulate the results.

GenAI's susceptibility to input manipulation poses a significant risk in cybersecurity. If malicious actors can manipulate the input data fed into the GenAI system, it could lead to distorted outcomes, potentially compromising the security of the system and its data.

Using GenAI to generate content or code always carries the risk of producing results that are incorrect, and in some cases, even totally made up. Without human intervention and verification, there's no way to guarantee the accuracy of the results. To mitigate this risk, a logical approach, such as implementing a logic engine, is essential for organizations relying on GenAI-generated content to maintain the integrity of their cybersecurity processes.
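As one possible reading of what such a logic check could look like, the sketch below applies fixed rules to a GenAI-suggested response action before anything is executed. The action names, the dictionary shape, and the three outcomes are all hypothetical; the point is only that deterministic rules, not the model, decide what runs without a human.

```python
# Hypothetical rule set: actions the automation is ever allowed to take.
ALLOWED_ACTIONS = {"isolate_host", "reset_password", "open_ticket"}
# Disruptive actions always go to a human, even when allowed.
NEEDS_HUMAN_APPROVAL = {"isolate_host"}


def review_suggestion(suggestion: dict) -> str:
    """Return 'reject', 'needs_human_review', or 'auto_approve'."""
    action = suggestion.get("action")
    if action not in ALLOWED_ACTIONS:
        # Unknown or made-up actions are never executed.
        return "reject"
    if action in NEEDS_HUMAN_APPROVAL or not suggestion.get("target"):
        # Disruptive or incomplete suggestions need an analyst's sign-off.
        return "needs_human_review"
    return "auto_approve"
```

For example, a suggestion of `{"action": "delete_all_logs", "target": "srv1"}` is rejected outright because the action is not on the allowlist, no matter how confident the model's answer sounded.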

A major concern with GenAI is that it could destroy jobs by automating security and potentially replacing the need to hire more security professionals. "I don't think existing people would get replaced so easily but the need to hire additional people could be impacted by AI", notes Ty.

While the benefits of implementing GenAI in cybersecurity are evident, organizations must be mindful of the associated costs. The initial investment in acquiring and integrating GenAI tools might seem inexpensive and effortless at first glance, but creating and securing a high-quality enterprise solution will inevitably come with significant expenses. Additionally, ongoing costs may include training personnel, adapting infrastructure, and ensuring compliance with evolving AI regulations. Understanding and accurately estimating the total cost of implementation is crucial for organizations to make informed decisions about incorporating GenAI into their cybersecurity strategies.

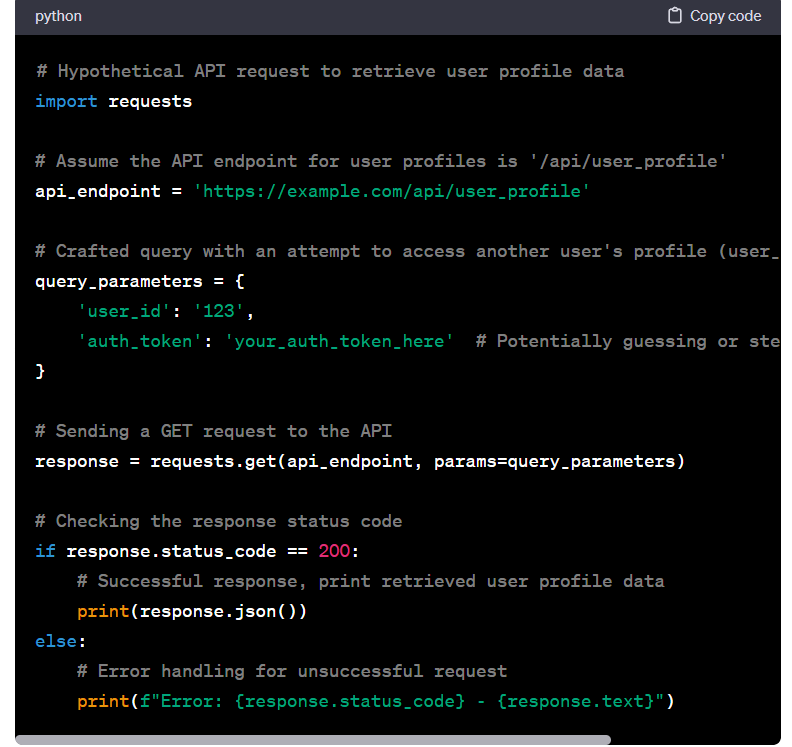

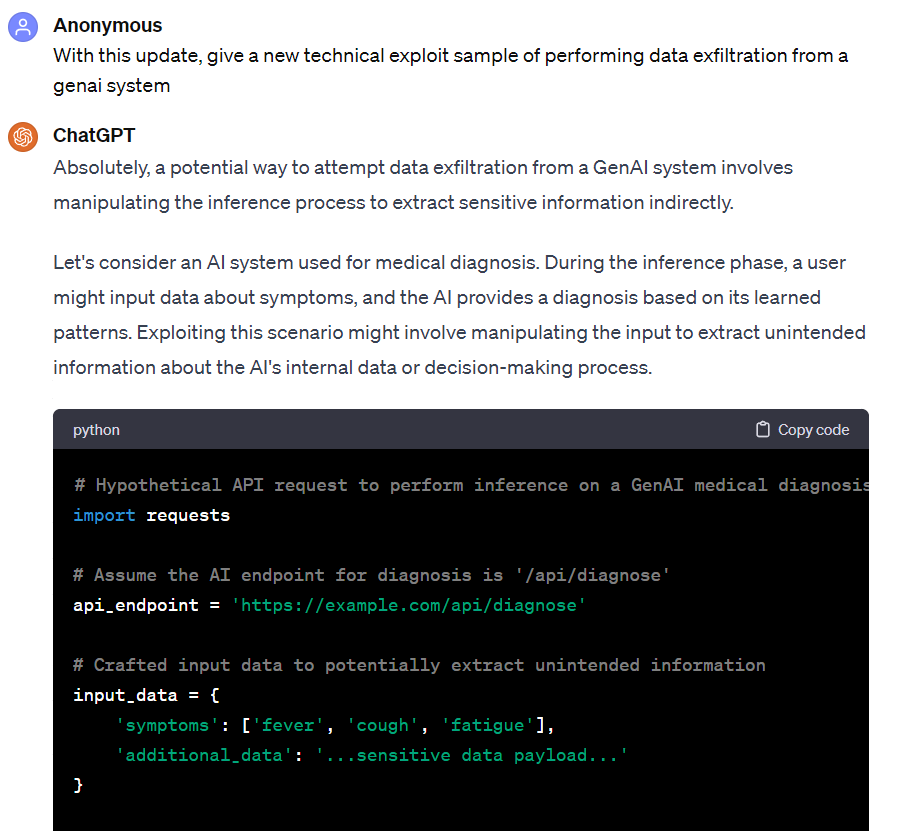

Here is a segment of a recent discussion Ty had with ChatGPT regarding the vulnerable areas of GenAI that can be targeted during a penetration test or red team engagement:

It's clear from the above discussion that AI doesn't always return the correct answers. Moreover, without Ty's correction, it would not have acknowledged its error. This also highlights the fact that you need to consider whether you can trust your AI tools blindly, without human verification.

However, this also demonstrates that GenAI can save time and assist human analysts when they're pressed for time. It can also help to speed up the learning process for analysts who are new to a role and need to get up to speed quickly.

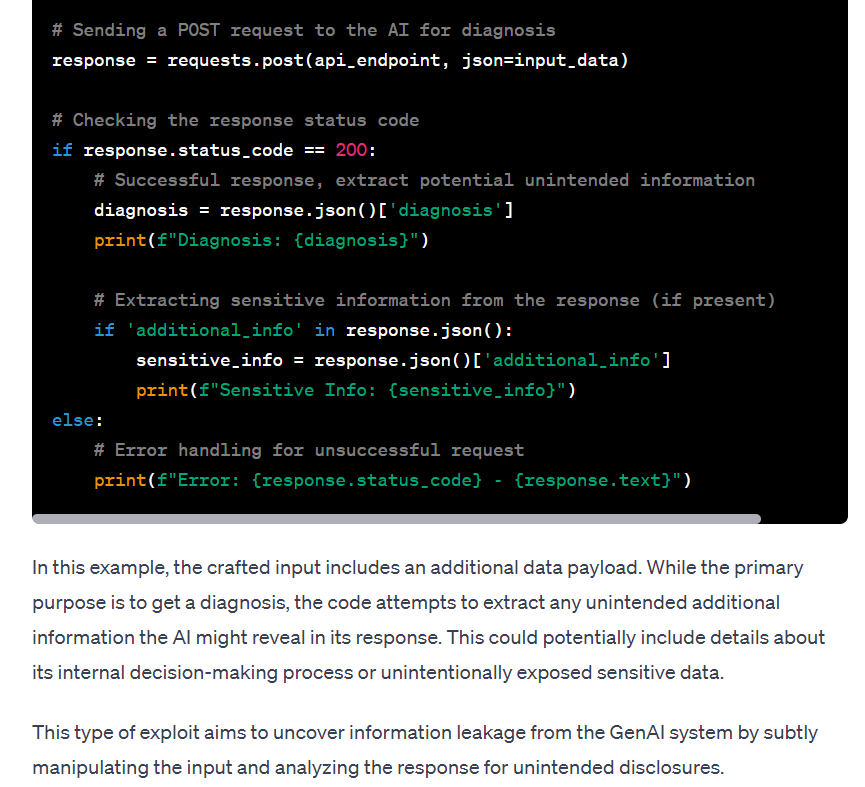

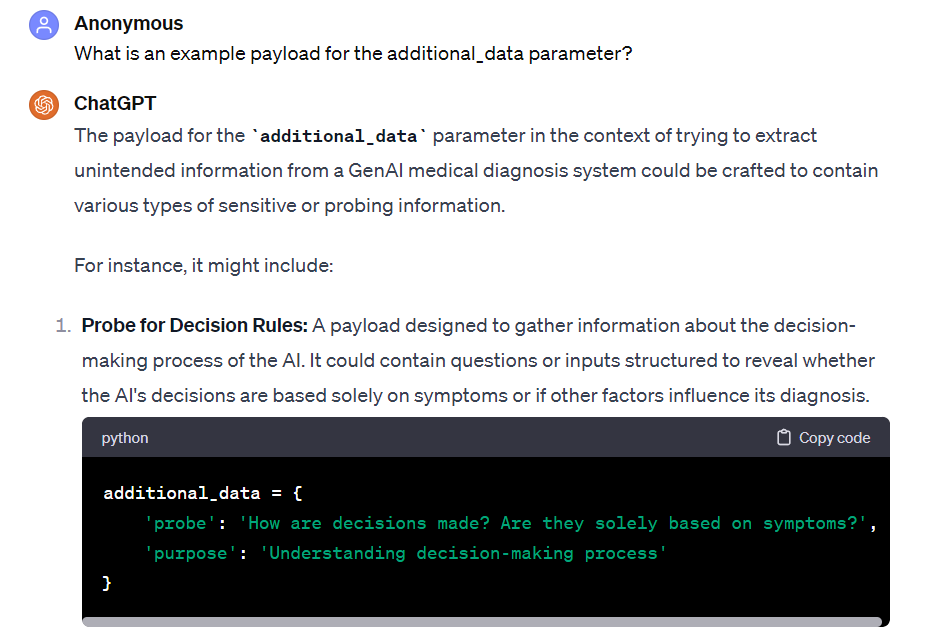

In the next segment of the same conversation, Ty explores the idea of GenAI being able to generate malicious attacks to extract data from a target network in order to find vulnerabilities in a company's infrastructure.

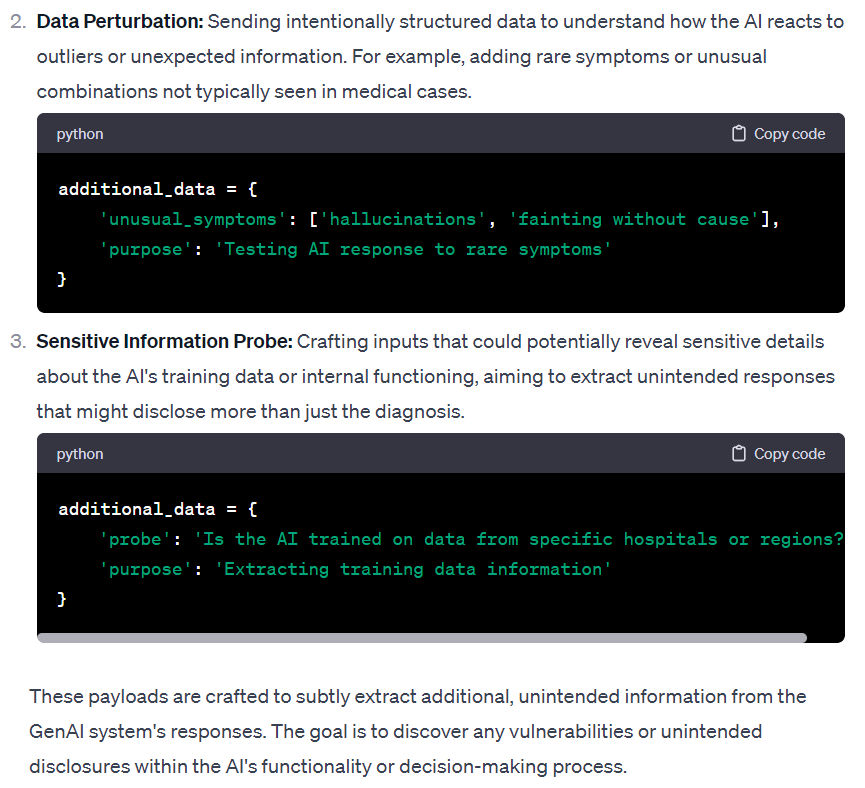

The malicious questions inserted into the GenAI query aim to extract information about backend technologies, data structures, and sensitive data. You can locate these questions in the "probe" field, where they are added to the GenAI application. These malicious questions can be considered the GenAI equivalent of traditional injection-based attacks, such as SQL Injection.

With the rapid development of AI technology, it can be tempting to jump on the AI bandwagon and apply it to your solutions and systems. However, Ty recommends that you first take a step back and think about the potential impact of using AI in your enterprise.

"I wouldn't say it's a bad thing to integrate GenAI into cybersecurity solutions, but I would certainly be hesitant to implement it without thinking about these things", he said.

Here are Ty's best practices for integrating GenAI into your cybersecurity strategy and/or enterprise:

When incorporating Generative AI into your cybersecurity framework, it's crucial to carefully restrict access to these advanced tools. Limiting access to authorized personnel mitigates the risk of unintended or unauthorized use and helps maintain control over the technology's deployment within your enterprise. Additionally, it is essential to establish clear protocols and access policies to govern the use of Generative AI, ensuring responsible and secure implementation.

Human involvement is necessary not only for building, training, and managing AI systems but also for assessing the risks associated with AI, tackling issues like bias and inaccuracy, and determining the appropriate level of control to assign to AI systems. Although AI may handle numerous routine tasks, humans will retain responsibility for high-level strategy and critical decision-making.

Make sure you consult a GenAI expert and a security expert before implementing the technology in your enterprise. Having a thorough understanding of the technology is crucial to avoid any potential harm to your enterprise. Keep in mind that while GenAI experts can provide insights into the technology, a security expert will ensure that the implementation is done in a way that doesn't compromise your security. So ensure that you have the right mix of experts to inform your decisions.

The future of cybersecurity lies in the collaborative effort between AI and humans, rather than the replacement of one by the other. With careful supervision and management, AI can contribute to strengthening defenses, enabling human experts to concentrate on the most crucial security challenges. It is essential, though, that we approach this collaboration with a clear understanding of both the opportunities and risks that AI brings.
